Izotope Rx Remove Instrument

Each module in the RX Audio Editor features a preset menu that lets you choose between factory presets and custom presets that you have saved. Any preset saved in a module in the RX Audio Editor can be opened in the corresponding RX plug-in, when applicable. Add Preset creates a new preset; Remove Preset removes a preset from the drop-down list.

Some dialogue problems can’t be fixed. Distortion, errant sirens, wind gumming the microphones: it’s impossible. The truth is, with iZotope RX, you can fix all sorts of previously impossible problems. Here are six tips to get you out of a dialogue bind.


- Looking to remix a song with a stellar vocal or create a mashup and need the acapella? This guide shows you how to isolate or remove vocals from a song. Learn how to isolate vocals with phase cancellation in both Ableton Live and Logic Pro X. You’ll also learn how to separate vocals from a song with Music Rebalance in iZotope RX 7.

- To add an iZotope effect plug-in to a track in Logic Pro, follow these steps: Click the 'Audio FX' button in the mixer channel strip of your audio or instrument track (this is the same button you would click to add Logic's own compressor or EQ, for instance).

- Aug 28, 2019 Steinberg SpectraLayers audio cleaning (vs iZotope RX) Hi guys We are doing a lot of recording on-site (usually of solo piano, or piano with one other instrument) in.

- Oct 25, 2019 Actually, iZotope has RX, which can separate vocals from music and even remove other kinds of sounds using a spectrographic tool. It's an amazing technology used in.


We are doing a lot of recording on-site (usually of solo piano, or piano with one other instrument) in locations where there are ambient noises. These noises can be anything from cars passing, or birds tweeting - through to (sometimes) aircraft overhead.

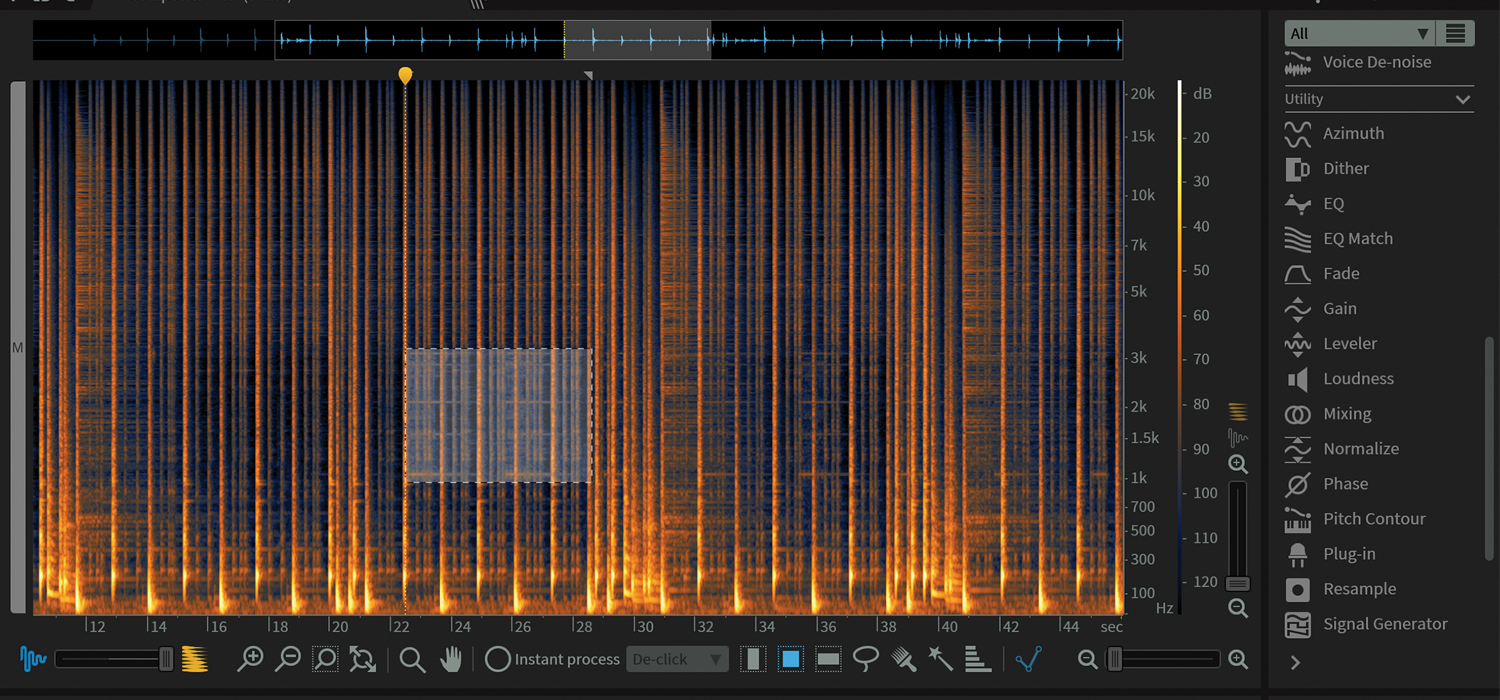

We used iZotope RX for a few months using a rental option, and the results were very good. We were able to use its spectral editing to pull out specific unwanted sounds, without any perceptible harm to the actual audio we wanted to keep. It even worked for exposed acoustic piano, which was very pleasing.

I'm now looking to buy a spectral editing tool, and have seen that Steinberg offer SpectraLayers, which integrates with Cubase via ARA for seamless editing. It seems to feature the same spectral editing functionality as RX.

I'm keen to hear from anyone who might have used SpectraLayers. Is it effective? Have you compared it to RX, and is there any difference between the results you can get from the two tools?

Any first-hand experience and thoughts would be very much appreciated!

Cheers,

Mike

Attached inconspicuously to clothing near a person’s mouth, the lavalier microphone (lav mic) provides multiple benefits when capturing dialogue. For video applications, there is no microphone distracting the viewer’s attention, and the orator can move freely and naturally since they aren’t holding a microphone. Lav mics also benefit audio quality: because they sit close to the mouth, they pick up far less noise and reverberation from the recording environment.

Unfortunately, the freedom of movement that lav mics give an orator can also be a detriment to the audio engineer, as the mic can rub against clothing or bounce around, creating disturbances often described as rustle. Here are some examples of lav-mic recordings where the person moved just a bit too much:

https://izotopetech.files.wordpress.com/2017/03/de-rustle-3.wav

https://izotopetech.files.wordpress.com/2017/03/de-rustle.wav

Rustle cannot be easily removed using the existing De-noise technology found in an audio repair program such as iZotope RX, because rustle changes over time in unpredictable ways based on how the person wearing the microphone moves their body. The clothing material also affects the rustle’s sonic quality: if you have the choice, attaching the mic to natural fibers such as cotton or wool produces less intense rustle than attaching it to synthetics or silk. Attaching the lav mic with tape instead of a clip can also change the amount and sound of rustle.

Because of all these variations, rustle presents itself sonically in many different ways, from high-frequency “crackling” sounds to low-frequency “thuds” or bumps. Additionally, rustle often overlaps with speech and is not well localized in time like a click, or in frequency like electrical hum. These difficulties made it nearly impossible to develop an effective deRustle algorithm using traditional signal processing approaches. Fortunately, with recent breakthroughs in source separation and deep learning, removing lav rustle with minimal artifacts is now possible.

Audio Source Separation

Often referred to as “unmixing”, source separation algorithms attempt to recover the individual signals composing a mix, e.g., separating the vocals and acoustic guitar from your favorite folk track. While source separation has applications ranging from neuroscience to chemical analysis, its most popular application is in audio, where it drew inspiration from the cocktail party effect in the human brain, which is what allows you to hear a single voice in a crowded room, or focus on a single instrument in an ensemble.

We can view removing lav mic rustle from dialogue recordings as a source separation problem with two sources: rustle and dialogue. Audio source separation algorithms typically operate in the frequency domain, where we separate sources by assigning each frequency component to the source that generated it. This process of assigning frequency components to sources is called spectral masking, and the mask for each separated source is a number between zero and one at each frequency. When each frequency component can belong to only one source, we call this a binary mask since all masks contain only ones and zeros. Alternatively, a ratio mask represents the percentage of each source in each time-frequency bin. Ratio masks can give better results, but are more difficult to estimate.
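To make the two mask types concrete, here is a minimal sketch for a single frame. It assumes the per-bin magnitudes of both sources are already known (which is only true for training data), and it uses one common definition of the ratio mask, speech magnitude divided by the sum of both magnitudes. The function names and the example values are hypothetical.

```python
def ratio_mask(speech_mag, rustle_mag, eps=1e-12):
    """Fraction of each bin's magnitude attributed to speech, in [0, 1]."""
    return [s / (s + r + eps) for s, r in zip(speech_mag, rustle_mag)]


def binary_mask(speech_mag, rustle_mag):
    """1.0 where speech dominates the bin, 0.0 where rustle dominates."""
    return [1.0 if s > r else 0.0 for s, r in zip(speech_mag, rustle_mag)]


# Hypothetical magnitudes for one frame with four frequency bins.
speech = [0.1, 0.9, 0.8, 0.05]
rustle = [0.3, 0.1, 0.2, 0.45]

print(binary_mask(speech, rustle))                         # hard 0/1 decisions
print([round(m, 2) for m in ratio_mask(speech, rustle)])   # soft values in [0, 1]
```

The binary mask throws away the speech energy in bins where rustle is louder, while the ratio mask keeps a proportional share of it, which is one reason ratio masks can sound better.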

For example, a ratio mask for a frame of speech in rustle noise will have values close to one near the fundamental frequency and its harmonics, but smaller values at low frequencies not associated with harmonics and at high frequencies where rustle noise dominates.

To recover the separated speech from the mask, we multiply the mask in each frame by the noisy magnitude spectrum, and then do an inverse Fourier transform to obtain the separated speech waveform.
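The recovery step can be sketched for a single frame as follows. This is an illustration under stated assumptions, not the RX implementation: it reuses the phase of the noisy signal (the text above only mentions magnitudes), it uses a plain O(n²) DFT so that it needs no third-party library, and it skips the windowing and overlap-add that a real multi-frame system would need. All names are hypothetical.

```python
import cmath
import math


def dft(frame):
    n = len(frame)
    return [sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n)
                for k in range(n)).real / n
            for t in range(n)]


def apply_mask(noisy_frame, mask):
    """Scale each bin's magnitude by the mask, keep the noisy phase, invert."""
    spectrum = dft(noisy_frame)
    masked = [m * abs(x) * cmath.exp(1j * cmath.phase(x))
              for m, x in zip(mask, spectrum)]
    return idft(masked)


# Toy frame: a low tone (stand-in for speech) plus a high tone (stand-in for rustle).
n = 16
tone = [math.sin(2 * math.pi * 1 * t / n) for t in range(n)]
noise = [0.5 * math.sin(2 * math.pi * 6 * t / n) for t in range(n)]
noisy = [a + b for a, b in zip(tone, noise)]

# Binary mask that keeps bins 0-3 and their mirrored negative-frequency bins.
mask = [1.0 if min(k, n - k) <= 3 else 0.0 for k in range(n)]
recovered = apply_mask(noisy, mask)
print(max(abs(a - b) for a, b in zip(recovered, tone)))  # tiny reconstruction error
```

Because the two tones occupy different bins, a binary mask recovers the low tone almost exactly. Real speech and rustle overlap in frequency, which is why the mask has to be estimated frame by frame.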

Mask Estimation with Deep Learning

The real challenge in mask-based source separation is estimating the spectral mask. Because of the wide variety and unpredictable nature of lav mic rustle, we cannot use pre-defined rules (e.g., filter low frequencies) to estimate the spectral masks needed to separate rustle from dialogue. Fortunately, recent breakthroughs in deep learning have led to great improvements in our ability to estimate spectral masks from noisy audio (e.g., this interesting article related to hearing aids). In our case, we use deep learning to train a neural network that maps speech corrupted with rustle noise (input) to separated speech and rustle (output).

Since we are working with audio, we use recurrent neural networks, which are better at modeling sequences than feed-forward neural networks (the models typically used for processing images) because they store a hidden state between time steps that can remember previous inputs when making predictions. We can think of our input sequence as a spectrogram, obtained by taking the Fourier transform of short, overlapping windows of audio, and we feed it to our neural network one column at a time. We learn to estimate a spectral mask for separating dialogue from lav mic rustle by starting with a spectrogram containing only clean speech.
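As a rough sketch of how such a spectrogram is built, the snippet below slices a signal into short, overlapping, Hann-windowed frames and takes the magnitude of each frame's Fourier transform. The frame length, hop size, and function names are assumptions chosen for illustration, and a plain DFT stands in for the FFT a real system would use.

```python
import cmath
import math


def hann(n):
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def magnitude_spectrogram(signal, frame_len=8, hop=4):
    """Return a list of columns; each column holds magnitudes for bins 0..frame_len/2."""
    window = hann(frame_len)
    columns = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [signal[start + i] * window[i] for i in range(frame_len)]
        column = [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / frame_len)
                          for t in range(frame_len)))
                  for k in range(frame_len // 2 + 1)]
        columns.append(column)
    return columns


# A steady tone that falls exactly on bin 2 of an 8-sample frame.
signal = [math.sin(2 * math.pi * 2 * t / 8) for t in range(32)]
spec = magnitude_spectrogram(signal)

# A recurrent network would consume these columns one at a time, in order.
for column in spec:
    print([round(v, 2) for v in column])
```

With a hop of half the frame length, consecutive columns share half their samples, so each column carries some context from its neighbors even before the network's hidden state comes into play.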

https://izotopetech.files.wordpress.com/2017/04/clean_speech.wav

We can then mix in some isolated rustle noise to create a noisy spectrogram where the true separated sources are known.

https://izotopetech.files.wordpress.com/2017/04/noisy_speech.wav

We then feed this noisy spectrogram to the neural network, which outputs a ratio mask. By multiplying the ratio mask with the noisy input spectrogram, we have an estimate of our clean speech spectrogram. We can then compare this estimated clean speech spectrogram with the original clean speech, and obtain an error signal which can be backpropagated through the neural network to update the weights. We can then repeat this process over and over again with different clean speech and isolated rustle spectrograms. Once training is complete, we can feed a noisy spectrogram to our network and obtain clean speech.
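The training loop described above can be sketched in a few dozen lines. This is a deliberately simplified stand-in, not the network used in RX: it replaces the recurrent network with a single feed-forward layer, works on synthetic four-bin frames in which "speech" and "rustle" never share a bin, and hand-codes the gradient instead of using a deep learning framework. Every name and constant is hypothetical.

```python
import math
import random

random.seed(0)
BINS = 4  # toy data: bins 0-1 carry "speech", bins 2-3 carry "rustle"


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def make_example():
    """Mix a known clean frame with a known rustle frame (magnitudes only)."""
    clean = [random.uniform(0.5, 1.0), random.uniform(0.5, 1.0), 0.0, 0.0]
    rustle = [0.0, 0.0, random.uniform(0.5, 1.0), random.uniform(0.5, 1.0)]
    noisy = [c + r for c, r in zip(clean, rustle)]
    return noisy, clean


# One weight matrix and bias vector: mask = sigmoid(W @ noisy + b)
W = [[0.0] * BINS for _ in range(BINS)]
b = [0.0] * BINS


def forward(noisy):
    return [sigmoid(sum(W[k][j] * noisy[j] for j in range(BINS)) + b[k])
            for k in range(BINS)]


def loss(noisy, clean):
    """Mean squared error between the masked noisy frame and the clean frame."""
    mask = forward(noisy)
    return sum((m * x - c) ** 2 for m, x, c in zip(mask, noisy, clean)) / BINS


def train_step(noisy, clean, lr=0.5):
    mask = forward(noisy)
    for k in range(BINS):
        err = mask[k] * noisy[k] - clean[k]
        # Chain rule through the mask multiplication and the sigmoid.
        grad_z = (2.0 / BINS) * err * noisy[k] * mask[k] * (1.0 - mask[k])
        for j in range(BINS):
            W[k][j] -= lr * grad_z * noisy[j]
        b[k] -= lr * grad_z


validation = [make_example() for _ in range(50)]
before = sum(loss(x, c) for x, c in validation) / len(validation)
for _ in range(2000):
    train_step(*make_example())  # a fresh clean/rustle mixture every step
after = sum(loss(x, c) for x, c in validation) / len(validation)

learned_mask = forward(validation[0][0])
print(before, after)
print([round(m, 2) for m in learned_mask])
```

On this toy data the learned mask moves toward one in the "speech" bins and toward zero in the "rustle" bins. The key point carried over from the text is that the error is measured on the masked spectrogram against known clean speech, which is only possible because the mixtures are created artificially.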

Gathering Training Data

We ultimately want our trained network to generalize across any rustle-corrupted dialogue an audio engineer may capture when working with a lav mic. To achieve this, we need to make sure our network sees as many different rustle/dialogue mixtures as possible. Obtaining lots of clean speech samples is relatively easy; there are lots of datasets developed for speech recognition, in addition to audio recorded for podcasts, video tutorials, etc. However, obtaining isolated rustle noises is much more difficult. Engineers go to great lengths to minimize rustle, and recordings of rustle are typically heavily overlapped with speech. As a proof of concept, we used recordings of clothing or card shuffling from sound effects libraries as a substitute for isolated rustle.

https://izotopetech.files.wordpress.com/2017/04/cards_playing_cards_deal02_stereo.wav

These gave us promising initial results for rustle removal, but only worked well for rustle where the mic rubbed heavily over clothing. To build a general deRustle algorithm, we were going to have to record our own collection of isolated rustle.

We started by reaching out to the post-production industry to obtain as many rustle-corrupted dialogue samples as possible. This gave us an idea of the different qualities of rustle we would need to emulate in our dataset. Our sound design team then worked with different clothing materials, lav mounting techniques (taping and clipping), and motions ranging from regular speech gestures to jumping and stretching to collect our isolated rustle dataset. Additionally, in machine learning any pattern in the data can potentially be picked up by the algorithm, so we also varied things like microphone type and recording environment to make sure our algorithm didn’t specialize to, for example, a specific microphone frequency response. Here’s a greatest hits collection of some of the isolated rustle we used to train our algorithm:

https://izotopetech.files.wordpress.com/2017/04/rustle_training.wav

Debugging the Data

One challenge with machine learning is that when things go wrong, it’s often not clear what the root cause is. Your training algorithm can compile, converge, and appear to generalize well, but still behave strangely in the wild. For example, our first attempt at training a deRustle algorithm always output clean speech with almost no energy above 10 kHz, even though there was speech energy at those frequencies.

It turned out that a large percentage of our clean speech was recorded with a microphone that attenuated high frequencies. Here’s an example problematic clean speech spectrogram with almost no high-frequency energy:


Since all of our rustle recordings had high-frequency energy, the algorithm learned to assign no high-frequency energy to speech. Adding more high-quality clean speech to our training set corrected this problem.
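A data problem like this can be caught before training with a simple check on each clean speech file. The sketch below is one hypothetical way to do it, not the check iZotope used: it measures what fraction of a frame's spectral energy lies at or above a cutoff (10 kHz here, matching the symptom described above). The function name, the toy signals, and any threshold you would flag files at are assumptions.

```python
import cmath
import math


def high_band_energy_fraction(frame, sample_rate, cutoff_hz):
    """Fraction of spectral energy at or above cutoff_hz (non-negative bins only)."""
    n = len(frame)
    total = 0.0
    high = 0.0
    for k in range(n // 2 + 1):
        coeff = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        energy = abs(coeff) ** 2
        total += energy
        if k * sample_rate / n >= cutoff_hz:
            high += energy
    return high / total if total > 0 else 0.0


sample_rate = 48000
n = 48  # bin spacing of 1 kHz keeps the toy tones exactly on bins

# "Dull" recording: only a 1 kHz component. "Bright": adds a 12 kHz component.
dull = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(n)]
bright = [math.sin(2 * math.pi * 1000 * t / sample_rate)
          + 0.5 * math.sin(2 * math.pi * 12000 * t / sample_rate) for t in range(n)]

dull_fraction = high_band_energy_fraction(dull, sample_rate, 10000)
bright_fraction = high_band_energy_fraction(bright, sample_rate, 10000)
print(dull_fraction, bright_fraction)
```

Running a check like this over the whole clean speech set, and looking at how many files fall near zero, would flag a dataset dominated by band-limited recordings.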

Before and After Examples

Once we got the problems with our data straightened out and trained the network for a couple of days on an NVIDIA K80 GPU, we were ready to try removing rustle from some pretty messy real-world examples:


Before

https://izotopetech.files.wordpress.com/2017/03/de-rustle.wav

After

https://izotopetech.files.wordpress.com/2017/03/de-rustle_proc.wav

Before

https://izotopetech.files.wordpress.com/2017/03/de-rustle-3.wav

After

https://izotopetech.files.wordpress.com/2017/03/de-rustle-3_proc.wav

Conclusion

While lav mics are an extremely valuable tool, if they move a bit too much, the rustle they produce can drive you crazy. Fortunately, by leveraging advances in deep learning, we were able to develop a tool to accurately remove this disturbance. If you’re interested in trying this deRustle algorithm, give the RX 6 Advanced demo a try.